In this post I’ll focus on demo papers and the tools they introduce that could be useful in research and development.

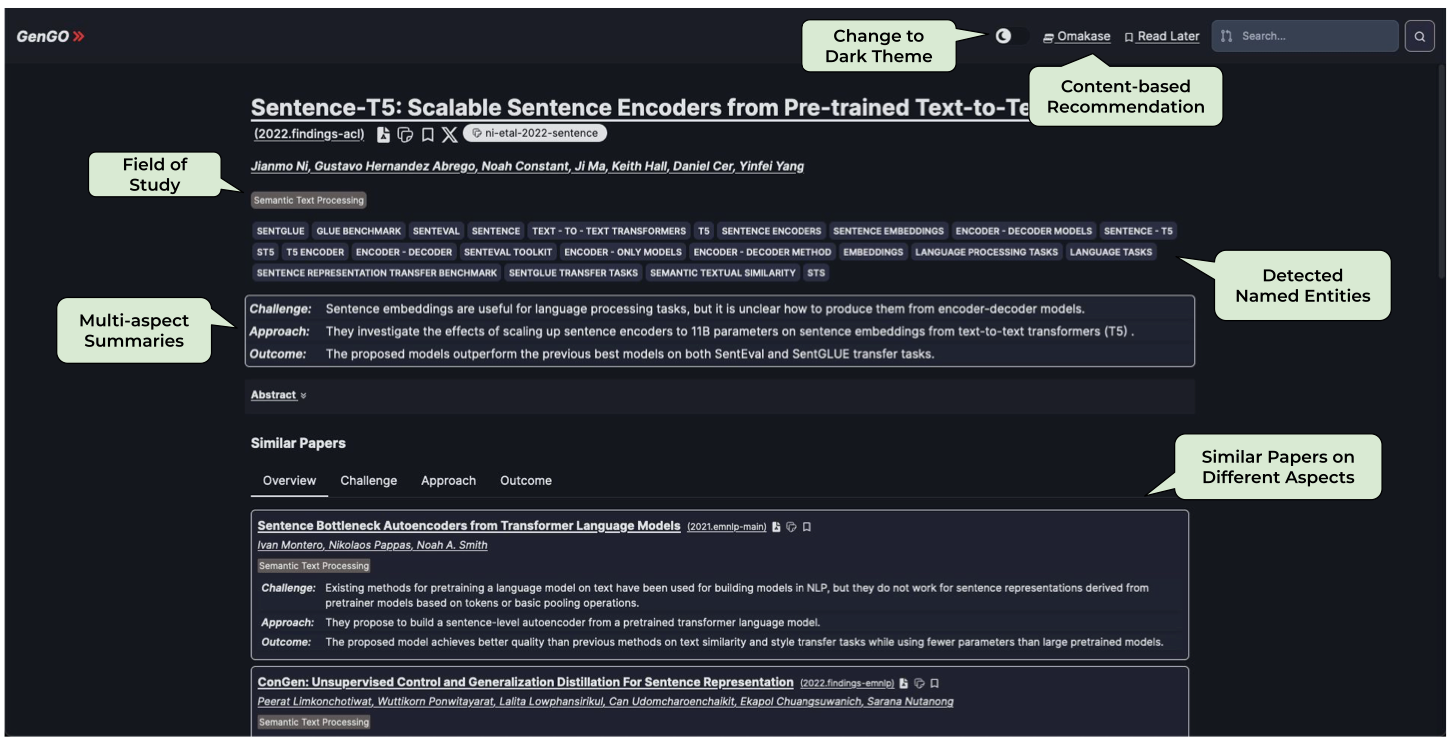

GenGO: ACL Paper Explorer with Semantic Features

Motivation

The researchers aimed to create a more efficient way to explore NLP papers, as the growing number of publications makes it hard to keep up with new developments.

Method

They developed GenGO, a system that enriches paper data with multi-aspect summaries, extracted entities, and semantic features. On top of this enriched data, it offers semantic search for finding and exploring papers.
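
To make the idea of semantic search a bit more concrete, here is a minimal sketch; it is not GenGO's implementation. It assumes each paper summary is turned into a vector and papers are ranked by cosine similarity to the query. The `embed` function is a toy word-count stand-in for the learned text embeddings a real system would use, and the paper titles and summaries are made up for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for a learned embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def search(query, papers, top_k=2):
    """Rank papers (title -> summary) by similarity of the summary to the query."""
    q = embed(query)
    scored = [(cosine(q, embed(summary)), title) for title, summary in papers.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]

# Hypothetical mini-corpus, for illustration only.
papers = {
    "Paper A": "efficient fine-tuning of large language models",
    "Paper B": "semantic search over scientific papers",
    "Paper C": "interpretability of attention heads in transformers",
}
results = search("search scientific papers", papers, top_k=1)
```

Swapping `embed` for a sentence-embedding model would leave the ranking step unchanged, which is what lets a query match papers that use different wording for the same idea.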

Results

GenGO indexes over 30,000 ACL papers and lets users explore them.

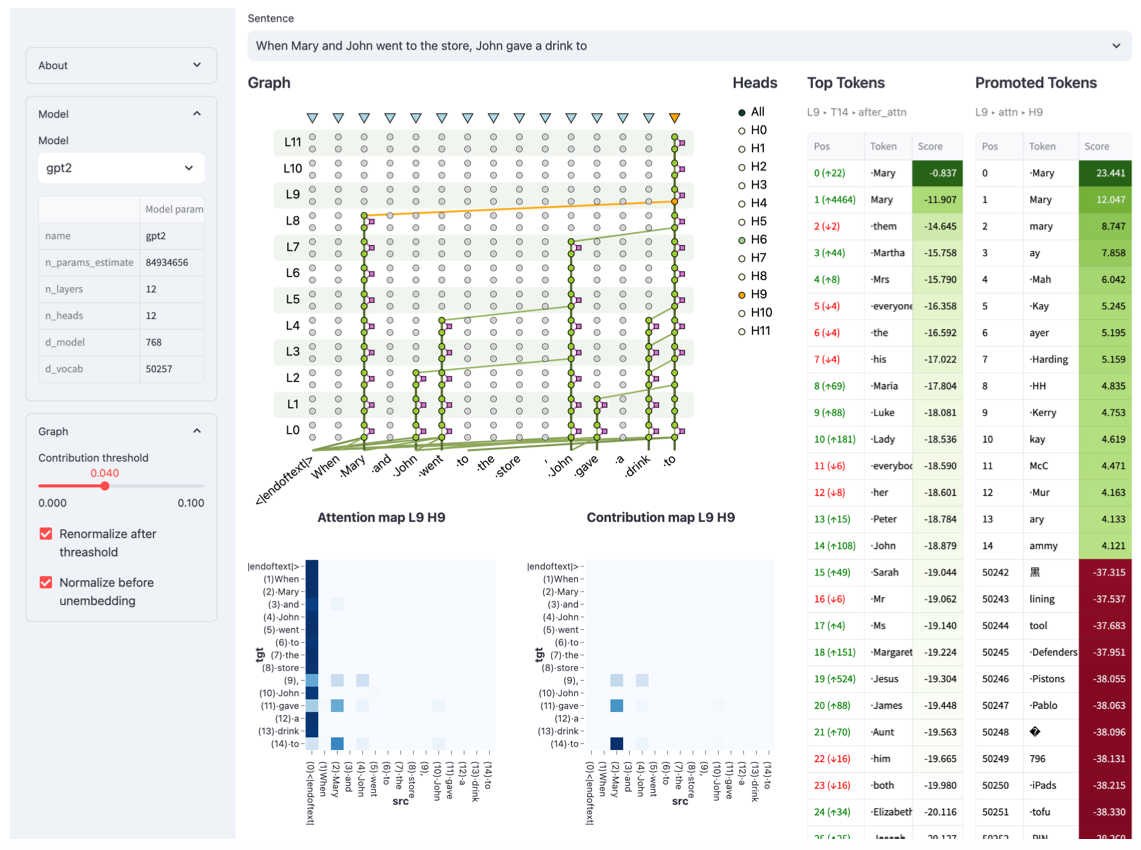

LM Transparency Tool: Interactive Tool for Analyzing Transformer Language Models

Motivation

The researchers aimed to improve transparency and interpretability in Transformer language models by developing a tool that can trace model predictions back to fine-grained components such as attention heads and neurons.

Method

They introduced the LM Transparency Tool, an interactive toolkit that visualizes the important parts of the prediction process and allows users to attribute changes in model behavior to individual components, like attention heads and feed-forward neurons.
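
To give an intuition for what attributing a prediction to individual components can mean, here is a small sketch of one simple approach, often called direct logit attribution. I am not claiming this is the algorithm the tool uses. It assumes the final residual stream is the sum of what each component wrote into it and ignores the final layer normalization, so the logit of a token splits into one term per component. All component names and numbers below are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))

# Hypothetical outputs that each component wrote into a 3-dimensional residual stream.
component_outputs = {
    "embedding":  [0.5, 0.0, 0.5],
    "attn.L0.H1": [1.0, 0.0, 0.0],
    "attn.L0.H2": [0.0, -0.5, 0.0],
    "mlp.L0":     [0.0, 0.0, 3.0],
}
# Hypothetical unembedding direction of the predicted token.
unembed_token = [1.0, 1.0, 0.5]

# Each component's share of the token's logit.
contributions = {name: dot(vec, unembed_token) for name, vec in component_outputs.items()}

# The residual stream is the element-wise sum of all component outputs,
# so the per-component shares add up to the full logit.
residual = [sum(vals) for vals in zip(*component_outputs.values())]
total_logit = dot(residual, unembed_token)
top_component = max(contributions, key=contributions.get)
```

Because the logit is linear in the residual stream under these assumptions, the per-component contributions sum exactly to `total_logit`, and a negative contribution marks a component that pushes against the prediction.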

Results

The tool lets users trace the information flow and see which components contribute to a specific prediction, which makes model analysis faster.

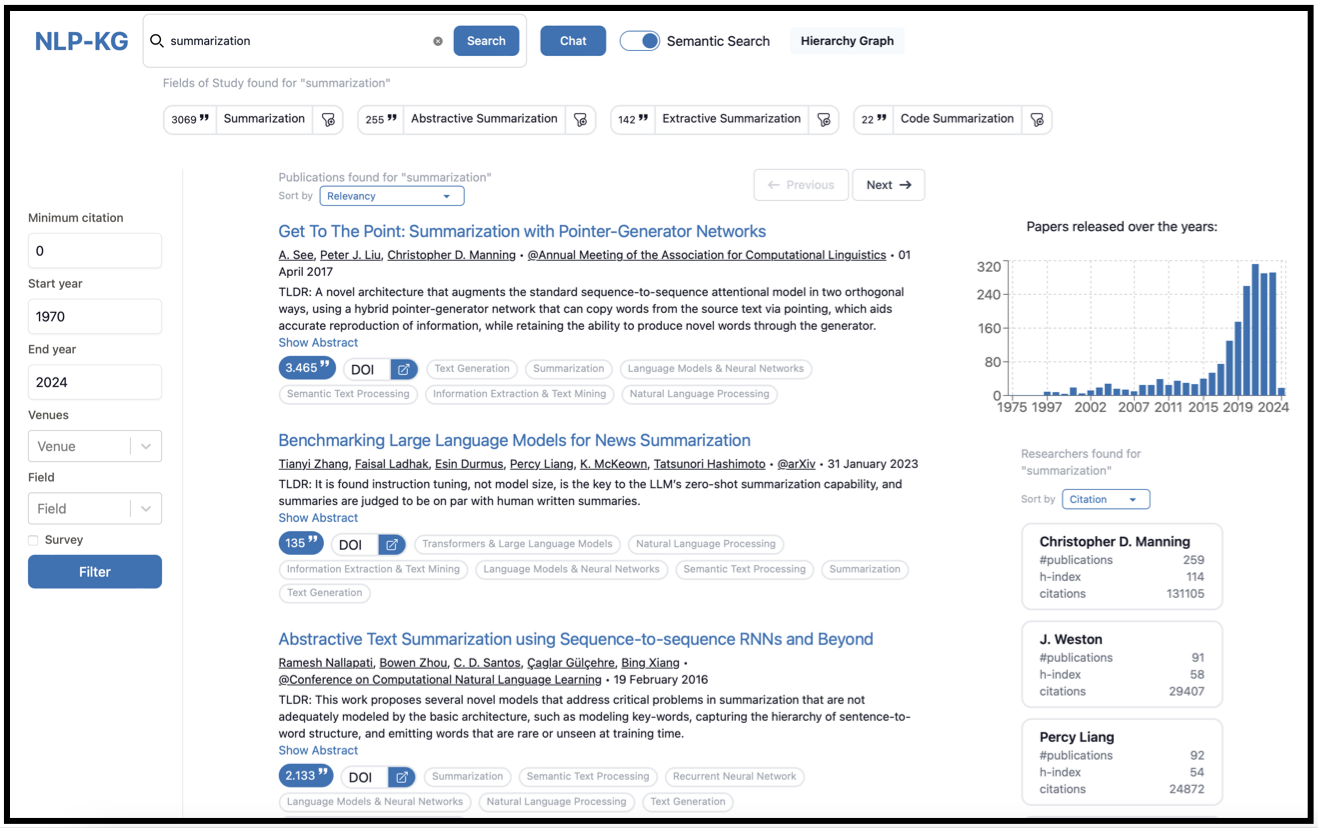

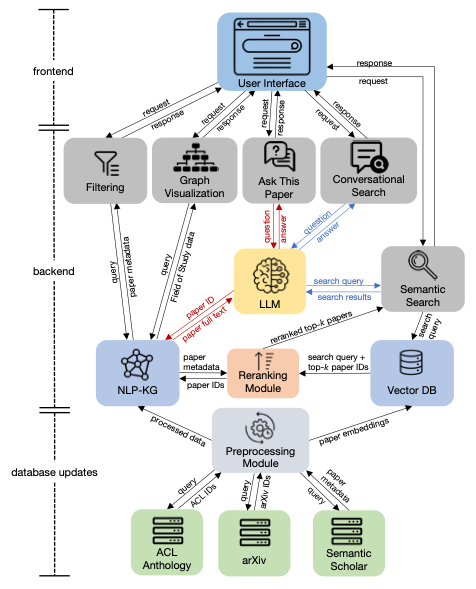

NLP-KG: A System for Exploratory Search of Scientific Literature in Natural Language Processing

Motivation

The researchers aimed to enhance exploratory search for NLP scientific literature, as traditional search systems are optimized for keyword-based lookups and limit exploration in unfamiliar fields.

Method

They developed NLP-KG, a system that uses a knowledge graph, semantic search, and a conversational interface to help users explore NLP literature, providing features like survey filtering, graph visualization, and the ability to ask detailed questions about specific papers.
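
As a rough illustration of why a knowledge graph helps with exploration, the sketch below assumes a graph in which fields link to their subfields and papers are tagged with fields. That schema, the field names, and the paper titles are my assumptions for the example, not NLP-KG's actual data model. Selecting a field then also surfaces papers from its subfields, which a plain keyword lookup would miss.

```python
from collections import deque

# Hypothetical field hierarchy: parent field -> subfields.
subfields = {
    "Natural Language Processing": ["Machine Translation", "Information Extraction"],
    "Information Extraction": ["Named Entity Recognition"],
}
# Hypothetical paper assignments: field -> paper titles.
papers_by_field = {
    "Machine Translation": ["MT survey"],
    "Information Extraction": ["IE overview"],
    "Named Entity Recognition": ["NER with transformers"],
}

def explore(field):
    """Collect papers tagged with a field or any of its descendants (breadth-first)."""
    found, queue, seen = [], deque([field]), {field}
    while queue:
        current = queue.popleft()
        found.extend(papers_by_field.get(current, []))
        for child in subfields.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return found

ie_papers = explore("Information Extraction")
```

Here `ie_papers` includes the named entity recognition paper even though it is not tagged with "Information Extraction" directly.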

Results

NLP-KG lets users explore NLP research efficiently, including fields they are not yet familiar with.

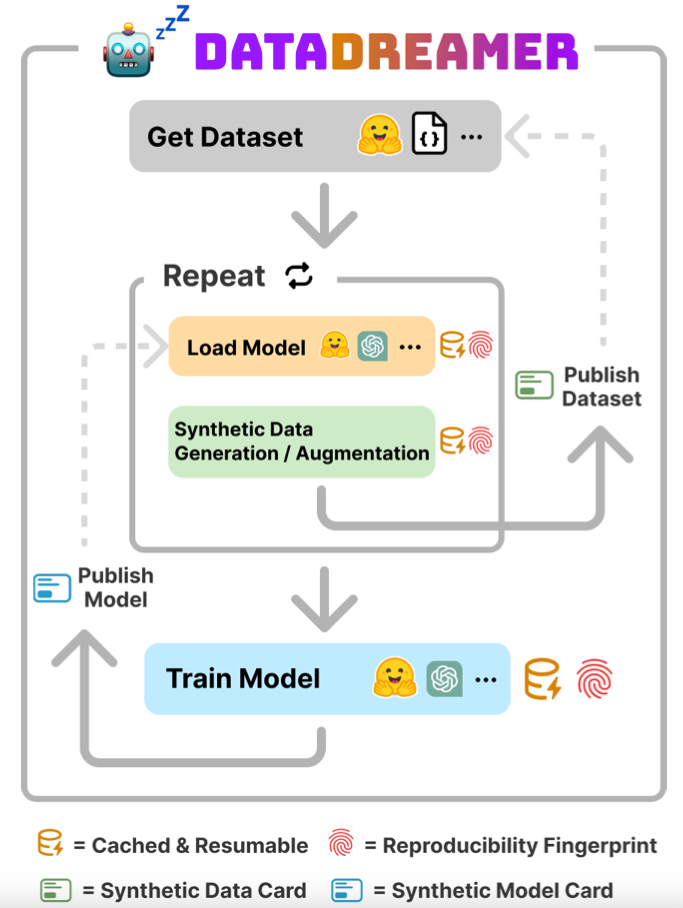

DataDreamer: A Tool for Synthetic Data Generation and Reproducible LLM Workflows

Motivation

The researchers wanted to address challenges in reproducibility and open science when working with large language models (LLMs). These challenges include issues like model scale, closed-source limitations, and the complexity of LLM-based workflows.

Method

They introduced DataDreamer, an open-source Python library designed to simplify LLM workflows, such as synthetic data generation, fine-tuning, and task evaluation. It supports reproducibility through features like caching, session management, and reproducibility fingerprints.
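
To show how caching and fingerprints can work together, here is a minimal sketch that deliberately does not use DataDreamer's API. It assumes a step's output is fully determined by its name, configuration, and inputs, hashes those into a fingerprint, and reuses the cached result whenever the fingerprint matches. The helper names `fingerprint`, `run_step`, and `fake_generate` are hypothetical.

```python
import hashlib
import json

_cache = {}

def fingerprint(step_name, config, inputs):
    """Hash everything that determines a step's output into a stable hex digest."""
    payload = json.dumps(
        {"step": step_name, "config": config, "inputs": inputs}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def run_step(step_name, config, inputs, fn):
    """Run fn(inputs, config) once per fingerprint and reuse the cached result afterwards."""
    key = fingerprint(step_name, config, inputs)
    if key not in _cache:
        _cache[key] = fn(inputs, config)
    return _cache[key], key

calls = []

def fake_generate(inputs, config):
    """Stand-in for an expensive LLM call; records each real invocation."""
    calls.append(inputs)
    return [f"{config['prefix']}: {text}" for text in inputs]

# Same configuration with keys in a different order: same fingerprint, served from cache.
out1, fp1 = run_step("generate", {"prefix": "Q", "temperature": 0.0}, ["a", "b"], fake_generate)
out2, fp2 = run_step("generate", {"temperature": 0.0, "prefix": "Q"}, ["a", "b"], fake_generate)
```

Sorting the keys before hashing makes the fingerprint independent of dictionary ordering, so two runs with the same settings can be recognized as the same experiment and compared or shared by fingerprint.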

Results

DataDreamer lets researchers chain tasks, automates workflow reproducibility, and makes LLM research more accessible. It makes multi-stage LLM workflows considerably simpler to build and experiment with.

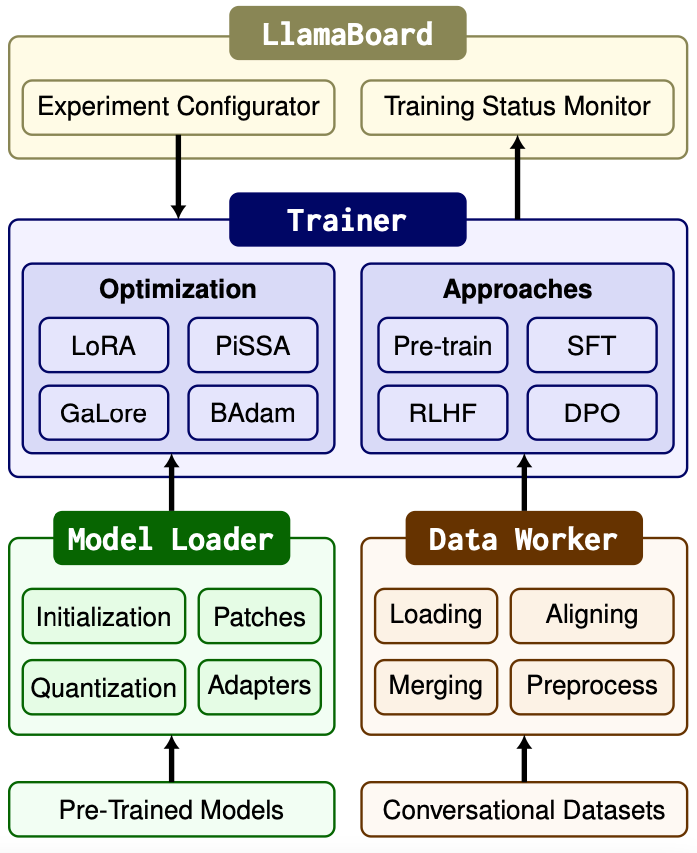

LlamaFactory: Unified Efficient Fine-Tuning of 100+ Language Models

Motivation

The researchers aimed to simplify and unify the process of fine-tuning LLMs, which can be complex due to the variety of methods and models.

Method

They developed LlamaFactory, a framework that integrates various efficient fine-tuning techniques like LoRA, QLoRA, and GaLore. It provides a user-friendly interface called LlamaBoard for codeless fine-tuning and evaluation of LLMs.
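
For readers who have not met LoRA before, here is a tiny pure-Python sketch of the idea; it is not the framework's code. LoRA keeps the pretrained weight `W` frozen and learns two small matrices `B` and `A` of rank `r`, so the effective weight is `W + (alpha / r) * B @ A`. The dimensions and values below are arbitrary and chosen only for illustration.

```python
import random

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_weight(W, A, B, alpha, r):
    """Effective weight W + (alpha / r) * B @ A, with W itself left untouched."""
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

random.seed(0)
d_out, d_in, r, alpha = 8, 8, 2, 4
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen base weight
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable, random init
B = [[0.0] * r for _ in range(d_out)]                                  # trainable, zero init

W_eff = lora_weight(W, A, B, alpha, r)  # equals W at the start because B is all zeros
full_params = d_out * d_in              # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)        # parameters updated by LoRA
```

In this toy case LoRA trains 32 values instead of 64. The gap grows quickly with size: for a 4096 x 4096 weight and `r = 8`, that is 65,536 trainable values instead of 16,777,216.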

Results

LlamaFactory can help you fine-tune models and track their performance.

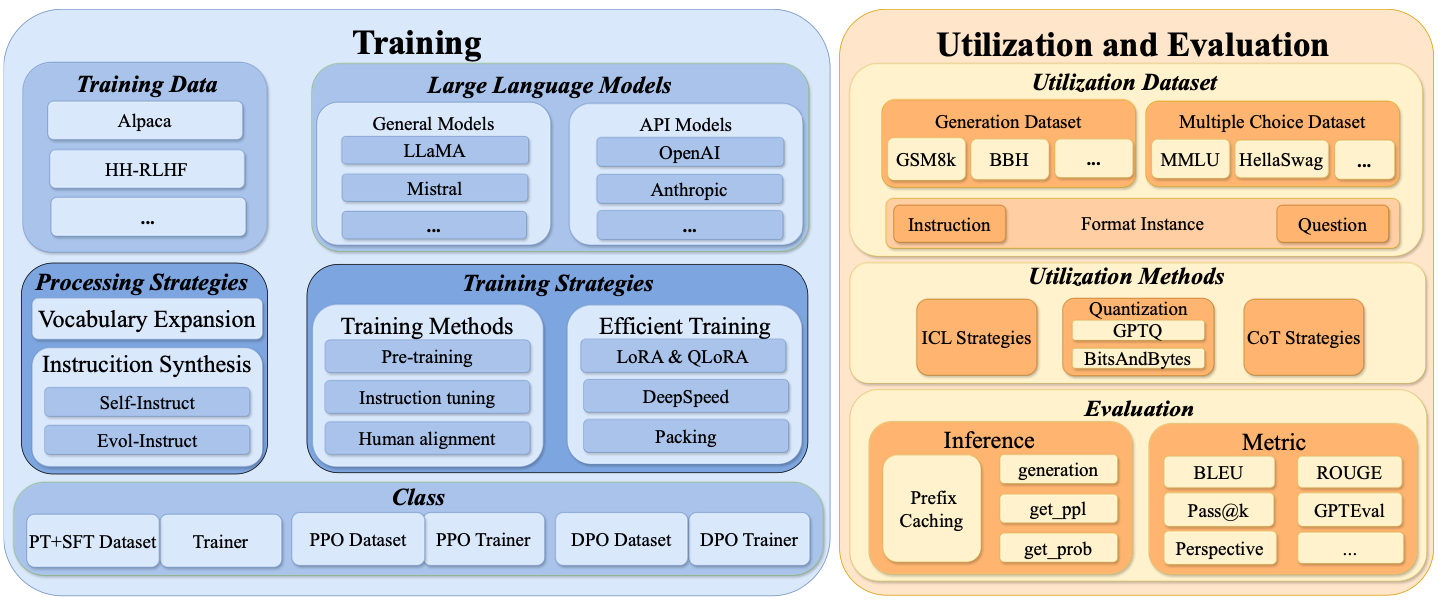

LLMBox: A Comprehensive Library for Large Language Models

Motivation

The researchers aimed to simplify the development and evaluation of LLMs by providing a unified library. This is important because current systems often lack standardization and require significant resources to reproduce results.

Method

They developed LLMBox, a library that offers a unified data interface for training, inference, and evaluation of LLMs. It supports efficient training strategies and a wide range of tasks and models, while also integrating features like quantization and human alignment tuning.
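
Since quantization comes up here, a quick sketch of the basic idea may help. It shows one of the simplest schemes, symmetric absmax quantization to 8-bit integers, and is not meant to represent how LLMBox implements it. The weights are made-up numbers.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in q_weights]

# Hypothetical weights, for illustration only.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored as a small integer plus one shared `scale`, and the rounding error per weight is at most half of `scale`. Very small weights such as `0.003` round to zero, which is the kind of precision loss more advanced schemes try to reduce.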

Results

LLMBox reduces the complexity of working with LLMs for researchers.